
NovelVizAI

AI Engineer

Impact

Solved "Reader's Fog" with a multi-stage NLP pipeline (NER, sentiment analysis, summarization).

Overview

The Problem: "Reader's Fog"

Have you ever returned to a 500-page fantasy epic after a week-long break, only to realize you’ve forgotten who that one minor character is or why the protagonist is suddenly in a dungeon? At NovelVizAI, we call this the "Reader's Fog"—a continuity gap that breaks immersion and turns reading into "work."

The Solution: An AI Reading Companion

Our team of avid readers and AI enthusiasts built NovelVizAI, a sophisticated AI Buddy designed to track emotional arcs, map complex characters, and provide instant summaries. It transforms reading from a memory test back into an immersive experience.

Figure: NovelVizAI Dashboard

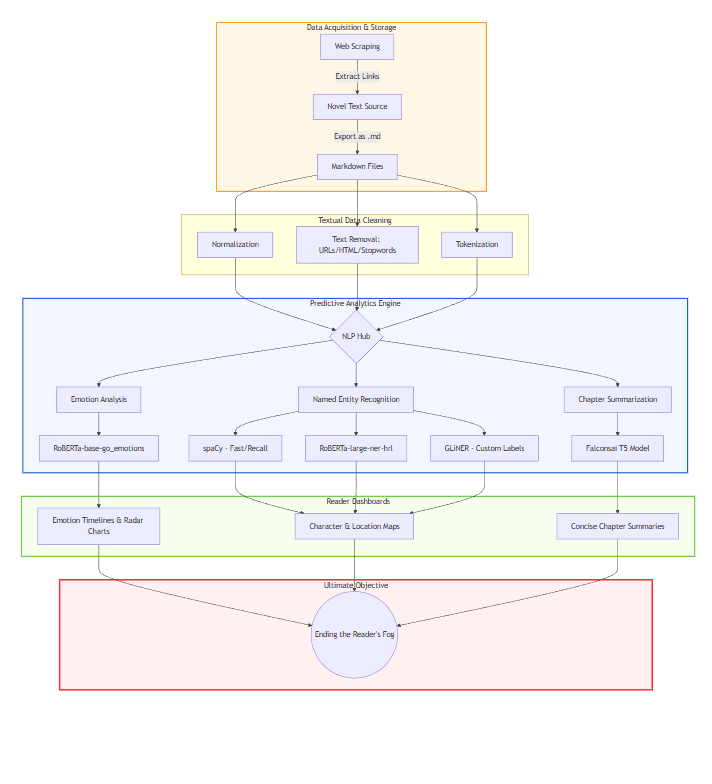

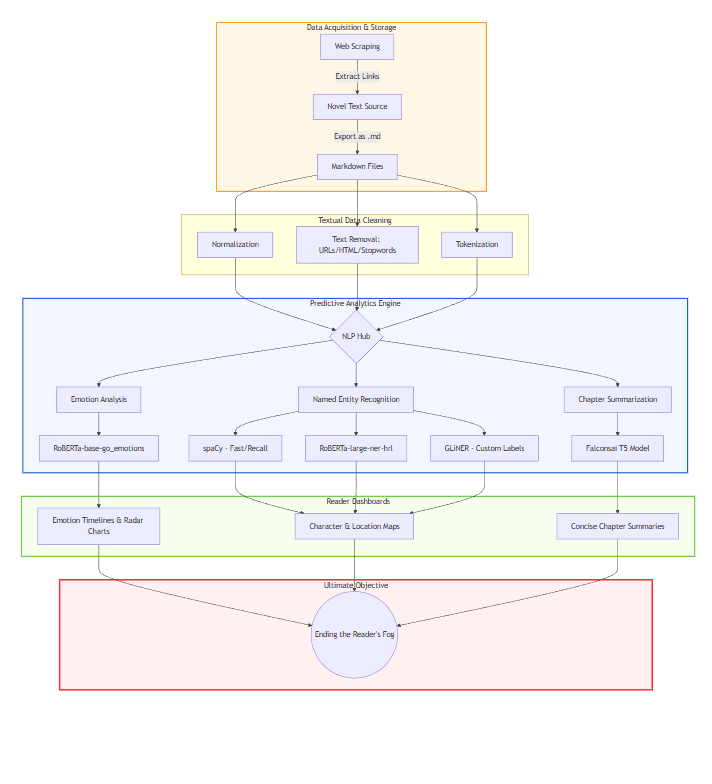

Technical Architecture

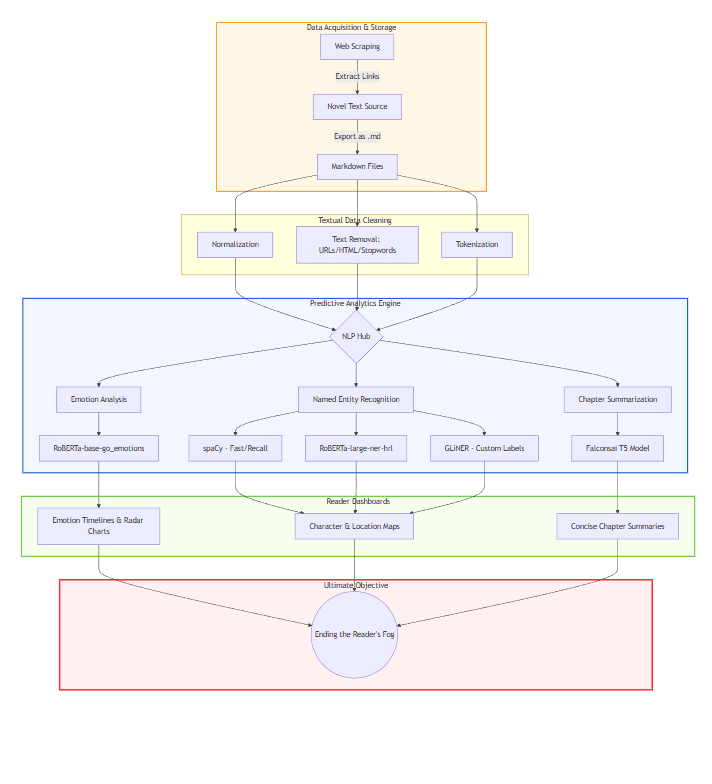

To bridge the continuity gap, we developed a multi-stage pipeline that transforms raw novel text into actionable insights.

Figure: System Architecture

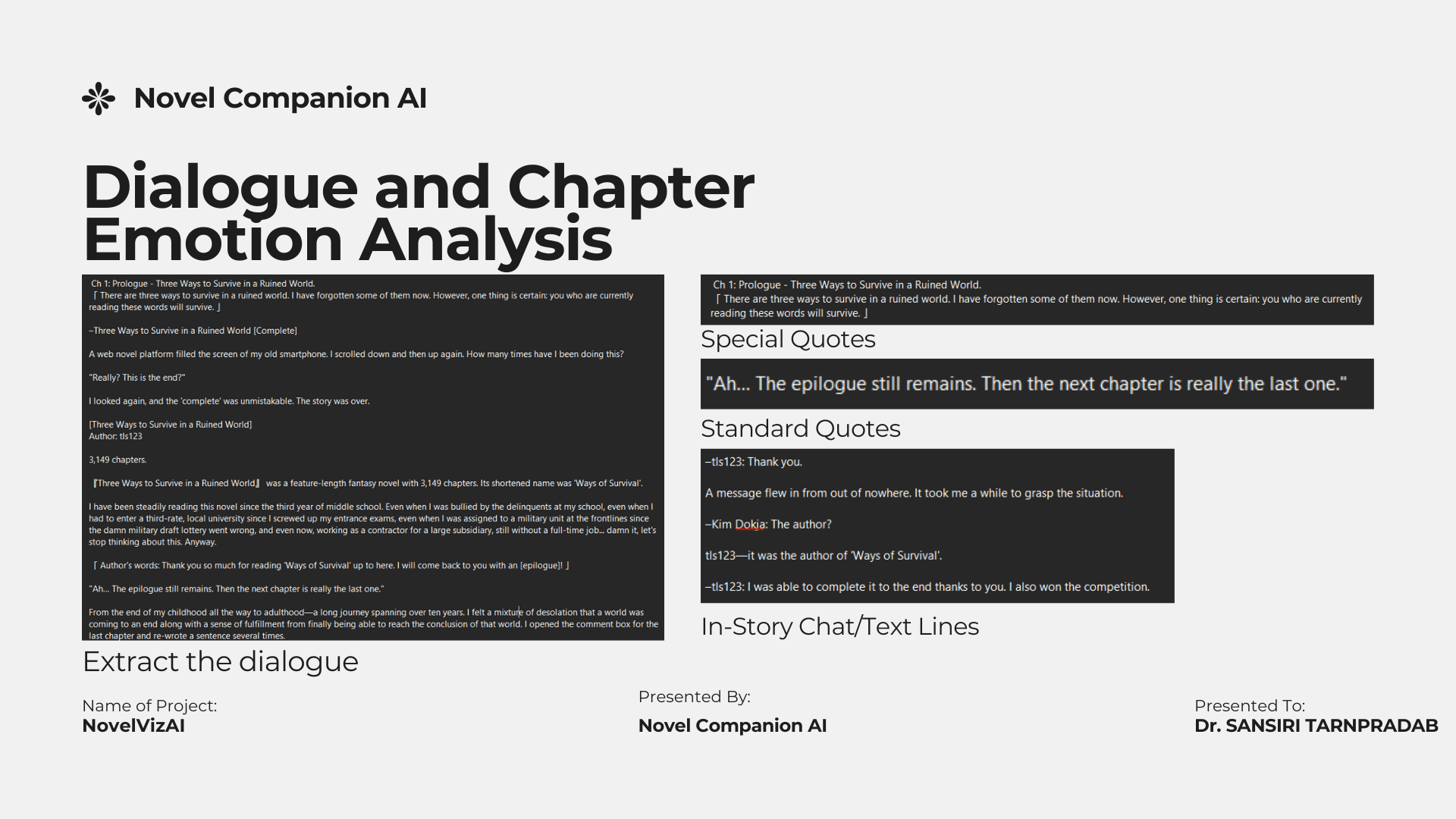

1. Smart Data Ingestion & Preprocessing

We scrape long-form web fiction (e.g., Omniscient Reader's Viewpoint) and convert it into structured Markdown.

- Normalization: Removal of HTML tags, extra whitespace, and non-standard characters.

- Tokenization: Splitting text into analyzable chunks for downstream models.
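The two steps above can be sketched with the Python standard library alone. This is a minimal illustration, not the project's actual code: the function names `normalize` and `chunk_words`, the list of block-level tags, and the chunk size and overlap are all assumptions made for the example.

```python
import html
import re
import unicodedata

# Assumption: these closing tags mark paragraph boundaries in the scraped HTML.
BLOCK_RE = re.compile(r"(?i)<\s*(?:br\s*/?|/p|/div|/h[1-6]|/li)\s*>")
TAG_RE = re.compile(r"<[^>]+>")


def normalize(raw: str) -> str:
    """Strip HTML tags, fold non-standard characters, collapse extra whitespace."""
    text = BLOCK_RE.sub("\n", raw)               # keep paragraph breaks as newlines
    text = TAG_RE.sub("", text)                  # drop every remaining tag
    text = html.unescape(text)                   # &amp; -> &, &nbsp; -> U+00A0
    text = unicodedata.normalize("NFKC", text)   # fold compatibility forms (U+00A0 -> space)
    # Remove control and format characters, but keep whitespace such as tabs and newlines.
    text = "".join(
        ch for ch in text if ch.isspace() or unicodedata.category(ch)[0] != "C"
    )
    lines = (" ".join(line.split()) for line in text.splitlines())
    return "\n".join(line for line in lines if line)


def chunk_words(text: str, max_words: int = 200, overlap: int = 20) -> list[str]:
    """Split text into word windows that overlap, so context carries across chunks."""
    if not 0 <= overlap < max_words:
        raise ValueError("overlap must be in [0, max_words)")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):      # last window reached the end
            break
    return chunks
```

Word-based windows are only one option; a production pipeline would more likely chunk by the downstream model's own tokenizer so that no chunk exceeds its input limit.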

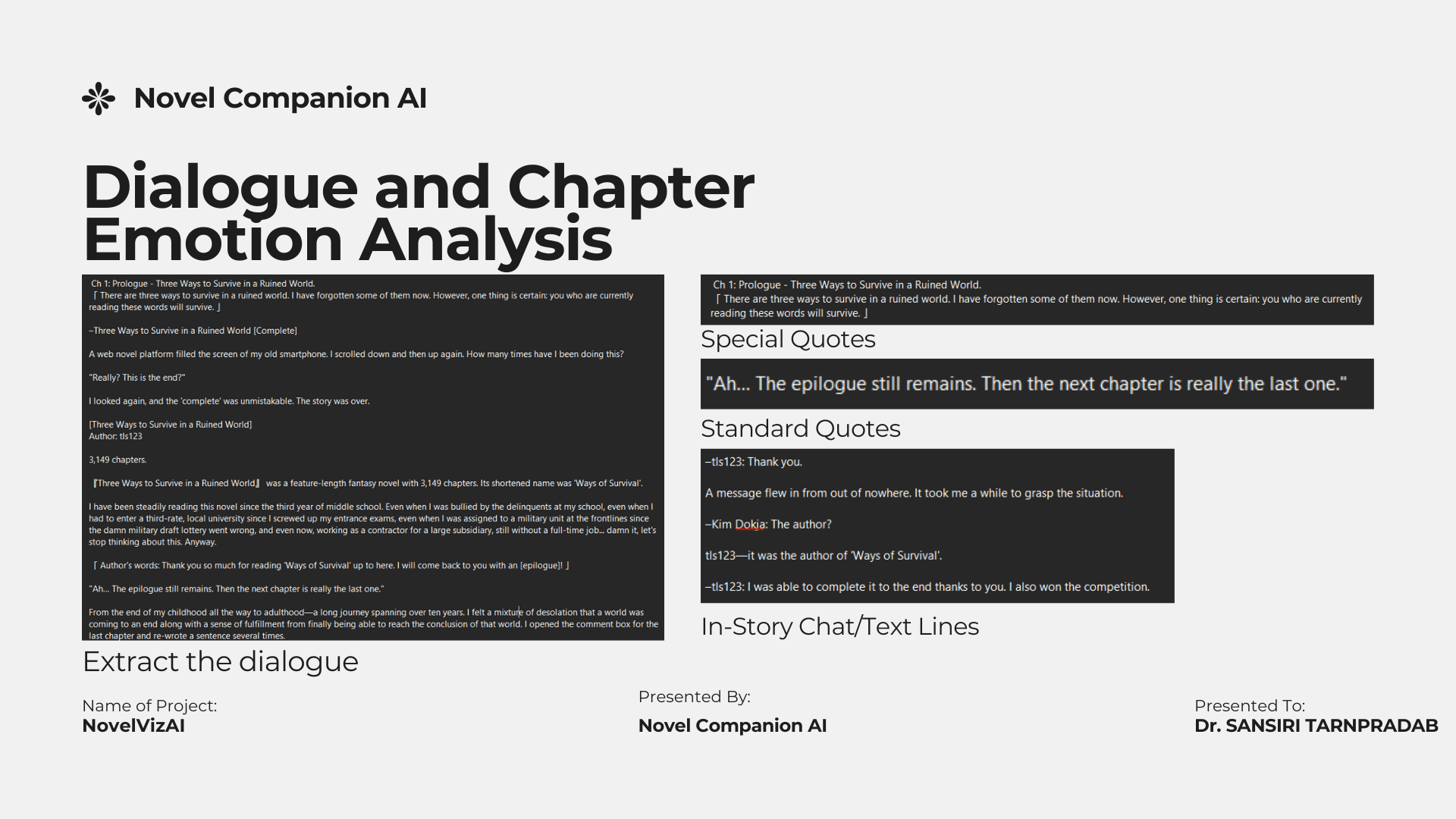

Figure: Dialogue Extraction

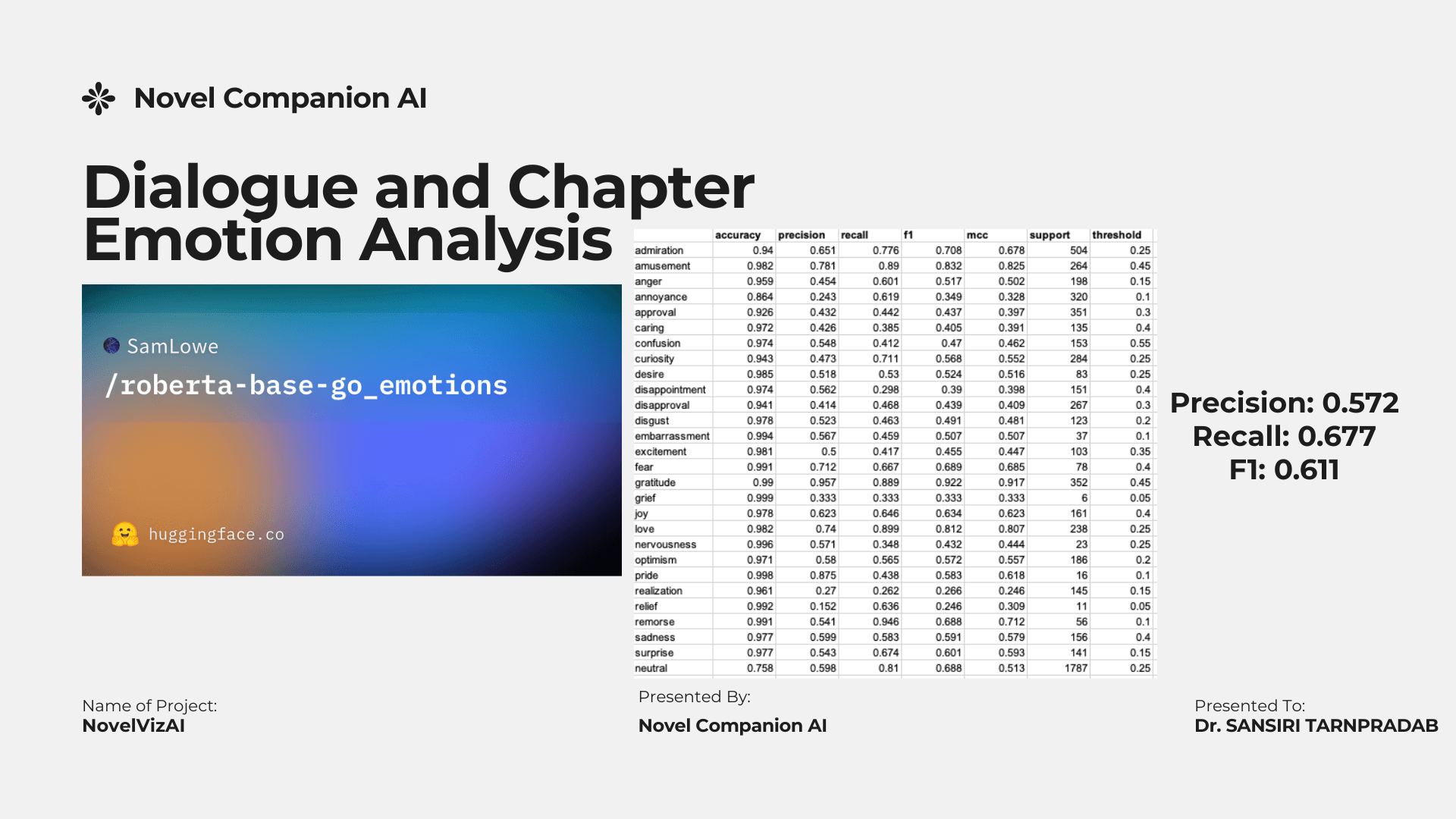

2. Emotional Arc Tracking (Sentiment Analysis)

We don't just read the plot; we track the feeling.

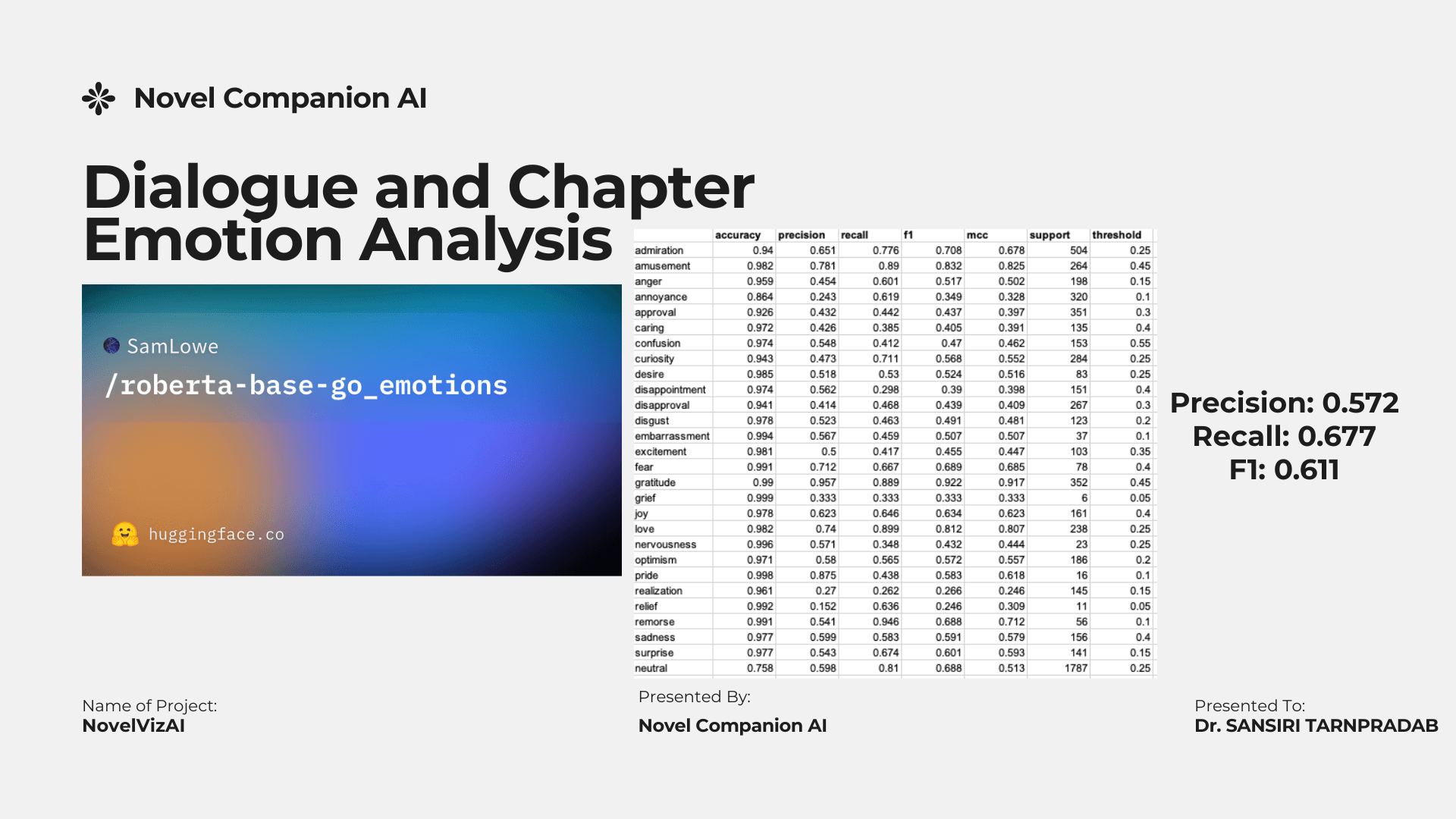

- Model: roberta-base-go_emotions

- Capabilities: Distinguishes between 28 nuanced emotions (Admiration, Optimism, Grief, etc.) rather than just positive/negative.

- Observation: In tests, 54.9% of passages were classified as Neutral, which is consistent with the grounded narrative tone of long-form fiction.
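A label-frequency figure like the one above can be computed by taking the top emotion for each passage and counting. The sketch below assumes the model is served through the Hugging Face `transformers` pipeline and that its Hub ID is `SamLowe/roberta-base-go_emotions`; the source only gives the short name, so treat the ID as an assumption. The helper names are hypothetical.

```python
from collections import Counter
from typing import Callable, Iterable

# Assumed Hub ID; the write-up only says "roberta-base-go_emotions".
MODEL_ID = "SamLowe/roberta-base-go_emotions"


def load_classifier(model_id: str = MODEL_ID) -> Callable[[list[str]], list[str]]:
    """Return a function that maps passages to their top emotion label."""
    # Imported lazily so the counting helpers below work without the dependency.
    from transformers import pipeline

    clf = pipeline("text-classification", model=model_id, top_k=1)

    def top_labels(passages: list[str]) -> list[str]:
        results = clf(passages, truncation=True)
        # With top_k=1 each result is expected to be a one-item list of dicts;
        # a bare dict is accepted too, to be defensive.
        return [(r[0] if isinstance(r, list) else r)["label"] for r in results]

    return top_labels


def label_frequencies(labels: Iterable[str]) -> dict[str, float]:
    """Percentage of passages per emotion label, most frequent first."""
    counts = Counter(labels)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {label: round(100 * n / total, 1) for label, n in counts.most_common()}


def emotional_arc(chapter_labels: dict[str, list[str]]) -> dict[str, str]:
    """Dominant emotion per chapter, skipping chapters with no labeled passages."""
    return {
        chapter: Counter(labels).most_common(1)[0][0]
        for chapter, labels in chapter_labels.items()
        if labels
    }
```

Calling `label_frequencies(load_classifier()(passages))` would download the model on first use; the two counting helpers run on any list of labels.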

Figure: Emotional Analysis

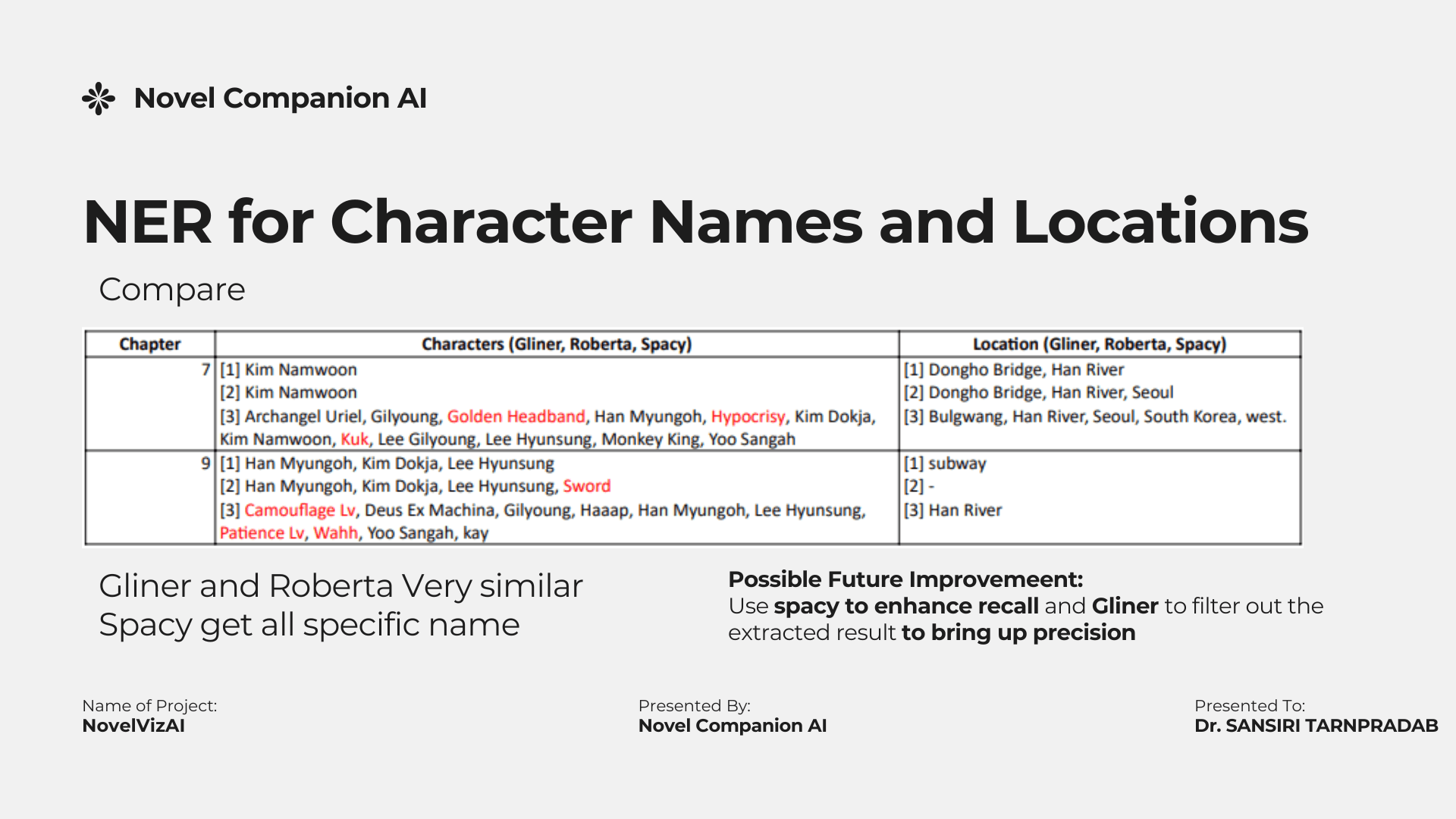

3. Deep Entity Recognition (NER)

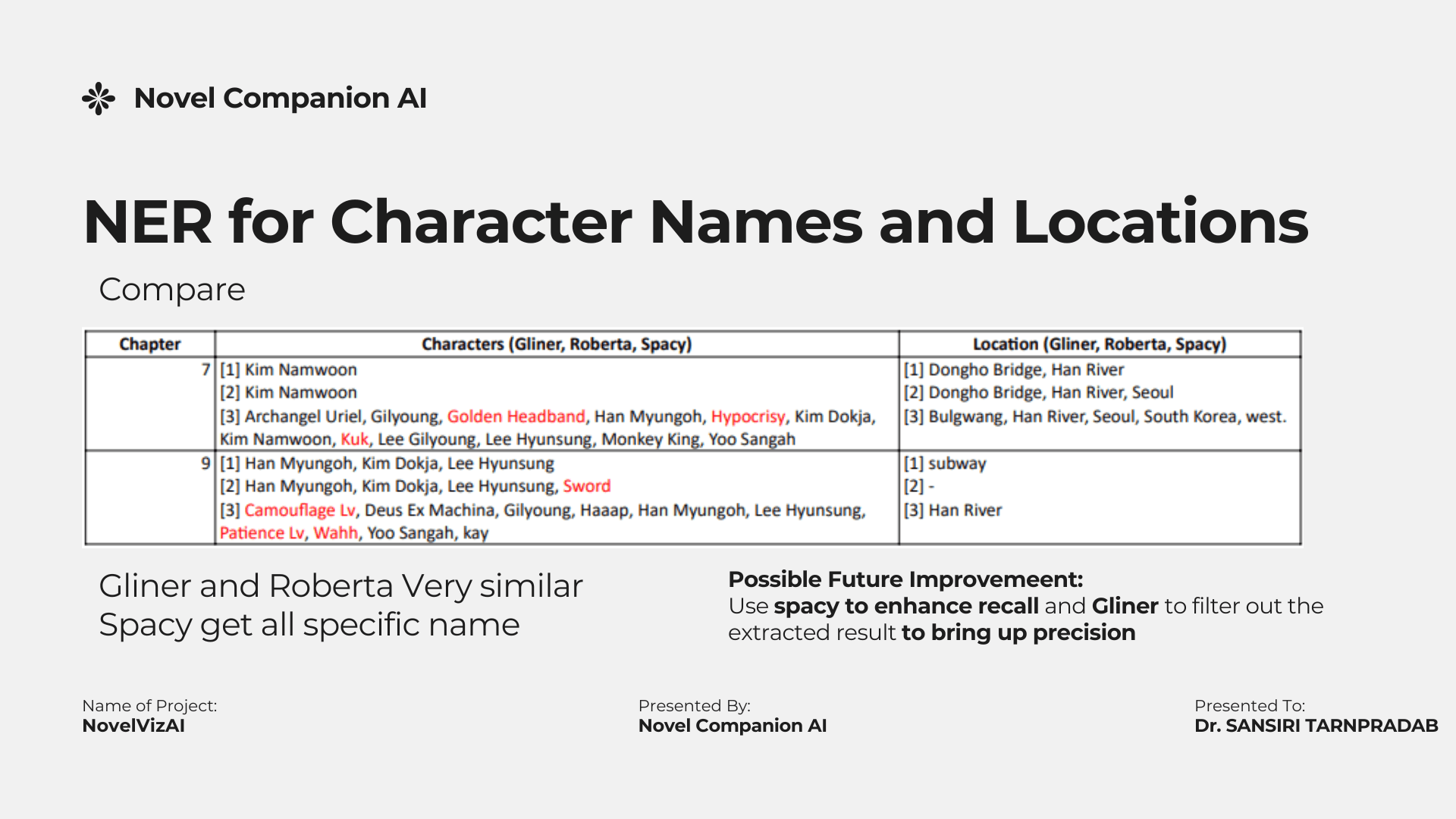

Solving the "Who is this?" problem required precise character tracking. We benchmarked three models:

- spaCy: Fast, but lower recall on fantasy names.

- Roberta-large-ner-hrl: High precision.

- GLiNER: Exceptionally precise at identifying specific names like Kim Namwoon or Dongho Bridge.

- Strategy: We employ a hybrid approach, using spaCy as a fast first-pass filter and GLiNER/RoBERTa for high-precision entity resolution.
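One way to read the hybrid strategy is as a two-stage cascade: a cheap first pass decides which sentences are worth sending to the slower, more precise model. The sketch below shows only that control flow. The two stages are passed in as plain callables, which in practice would wrap spaCy and GLiNER or the RoBERTa model; the `Entity` type, the `hybrid_ner` name, and the 0.5 score threshold are assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass(frozen=True)
class Entity:
    text: str
    label: str
    score: float


# Stage 1: cheap check, e.g. "did the fast model find any entity here?"
CandidateFilter = Callable[[str], bool]
# Stage 2: precise extraction, e.g. a wrapper around the slower model.
PreciseExtractor = Callable[[str], list[Entity]]


def hybrid_ner(
    sentences: Iterable[str],
    has_candidates: CandidateFilter,
    extract: PreciseExtractor,
    min_score: float = 0.5,  # hypothetical confidence threshold
) -> dict[str, Entity]:
    """Run the precise extractor only on sentences the cheap filter flags.

    Returns the highest-scoring mention per entity, keyed by case-folded text.
    """
    best: dict[str, Entity] = {}
    for sentence in sentences:
        if not has_candidates(sentence):
            continue  # skip the expensive model entirely
        for ent in extract(sentence):
            if ent.score < min_score:
                continue
            key = ent.text.casefold()
            if key not in best or ent.score > best[key].score:
                best[key] = ent
    return best
```

Because the precise model never sees sentences the filter rejects, the filter bounds overall recall, so it should be tuned to over-flag rather than under-flag.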

Figure: NER Extraction

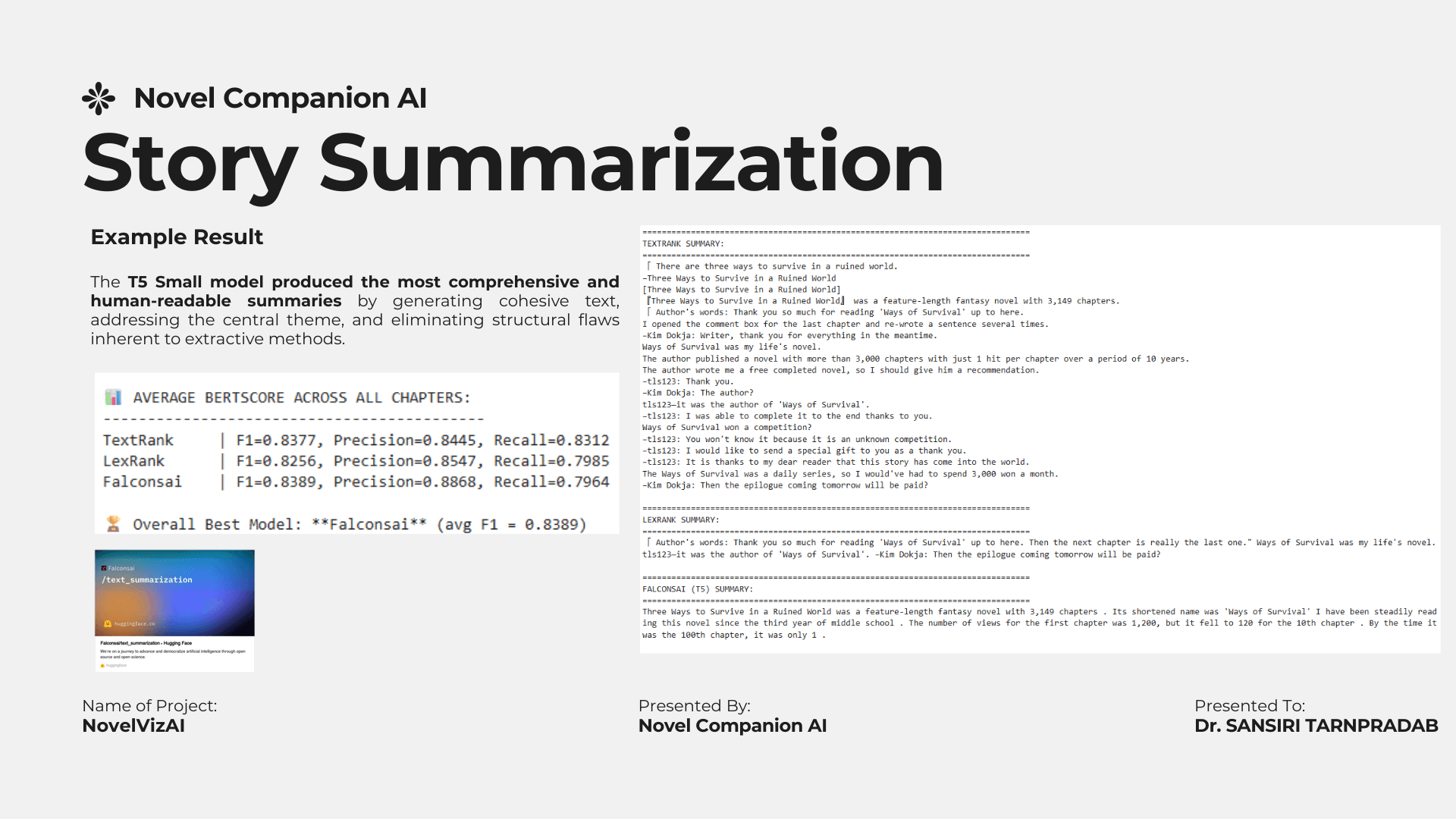

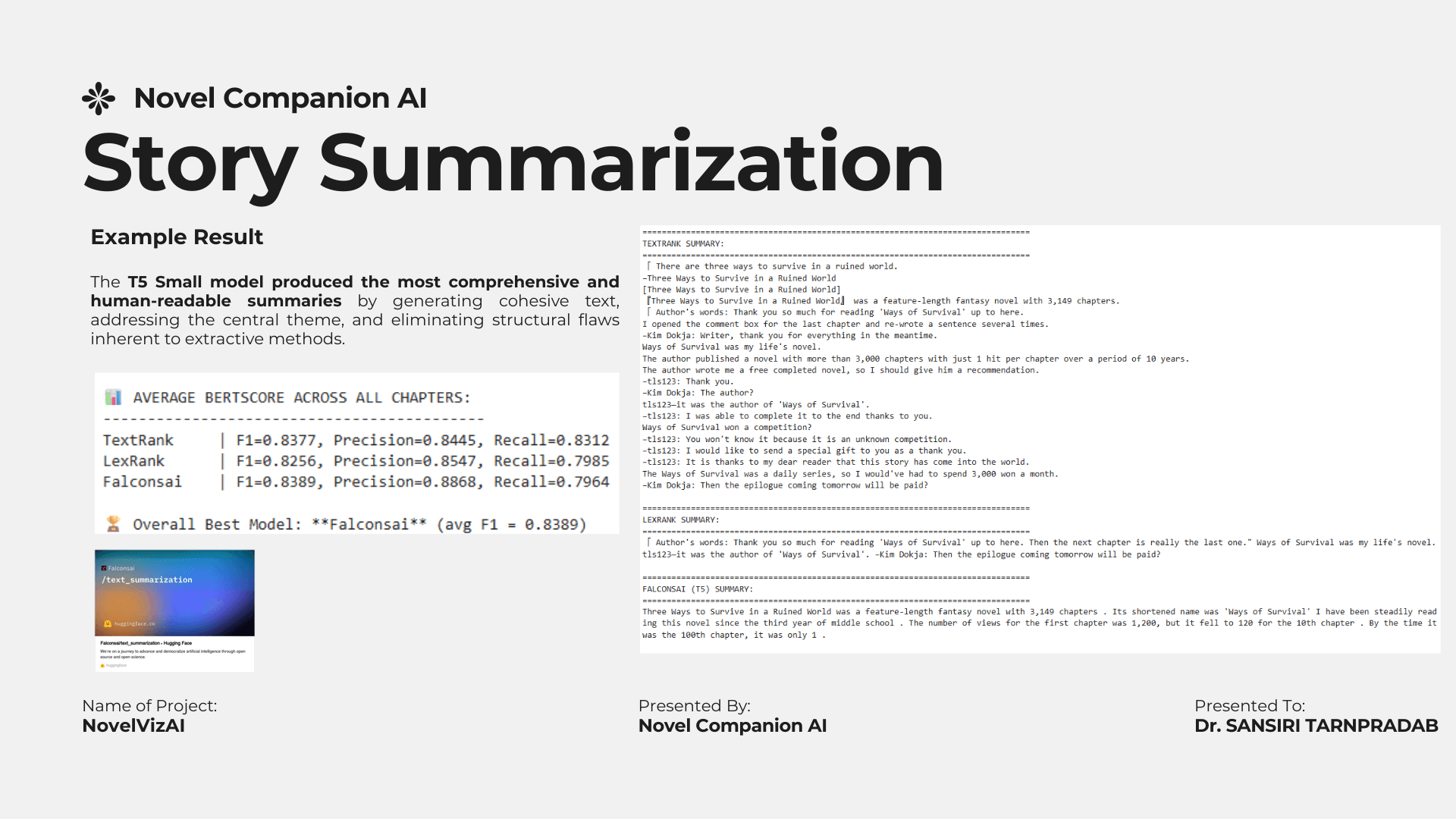

4. Agentic Summarization

We employed Large Language Models (LLMs) to generate concise, ~50-word summaries for every chapter.

- Benchmarking: Compared LexRank, TextRank, and Falconsai.

- Winner: Falconsai emerged as the champion with an impressive average F1 score of 0.8389, delivering the most coherent and context-aware summaries.
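The write-up does not say which F1 variant produced the 0.8389 average. As one plausible reading, the sketch below computes a ROUGE-1-style unigram-overlap F1 between a generated summary and a reference, then averages it over chapters. The metric choice, the tokenizer regex, and the function names are all assumptions, so scores from this code are not comparable to the reported figure.

```python
import re
from collections import Counter
from typing import Iterable


def tokens(text: str) -> list[str]:
    """Lowercase word tokens; a deliberately simple tokenizer for illustration."""
    return re.findall(r"[a-z0-9']+", text.lower())


def unigram_f1(candidate: str, reference: str) -> float:
    """Harmonic mean of unigram precision and recall (ROUGE-1-style F1)."""
    cand, ref = Counter(tokens(candidate)), Counter(tokens(reference))
    overlap = sum((cand & ref).values())  # clipped count of shared unigrams
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


def average_f1(pairs: Iterable[tuple[str, str]]) -> float:
    """Mean F1 over (candidate, reference) summary pairs; 0.0 if there are none."""
    scores = [unigram_f1(c, r) for c, r in pairs]
    return sum(scores) / len(scores) if scores else 0.0
```

Running `average_f1` once per summarizer over the same chapter set gives a like-for-like comparison of LexRank, TextRank, and Falconsai under this metric.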

Figure: Summarization Result

Why This Matters

NovelVizAI demonstrates how NLP and Agentic AI can be applied to unstructured creative text to enhance user experience. It's not just about summarizing text; it's about preserving context and immersion in a way that respects the source material.

NLP, Transformers, Sentiment Analysis, NER


Siwarat Laoprom © 2026